In today’s digital age, connectors and interfaces shape how we interact with technology. From mobile apps and websites to smart devices and industrial systems, they bridge users with the digital and physical worlds. Wire connectors in particular provide the secure physical connections inside devices that carry the data and power our digital experiences depend on.

In this blog post, we delve into the world of connectors and interfaces, examining various types and their impact on user interactions. Whether you’re a designer, developer, or simply curious about the inner workings of digital and electronic systems, this guide provides insights into the types of interfaces and wire connectors and their unique functions.

We’ll begin by defining what an interface is, why it’s important in technology, and how connectors, including types of wire connectors, support these systems. As we explore the landscape of interfaces, we’ll discuss their strengths, limitations, and best practices in design and application.

Throughout this post, we’ll cover a range of interface types, including graphical user interfaces (GUIs), command-line interfaces (CLIs), voice interfaces, touch interfaces, and advanced interfaces like augmented and virtual reality (AR/VR). Additionally, we’ll examine the types of wire connectors that enable secure, reliable connections within these devices, supporting both power and data transfer.

Furthermore, we’ll look at emerging trends in interface and connector design, such as conversational interfaces powered by natural language processing and gesture-based interfaces enabled by machine learning. These innovations, alongside advancements in wire connectors, create new and exciting ways for users to interact with technology safely and efficiently.

By understanding the different types of interfaces and wire connectors and the principles behind their design, you’ll gain valuable knowledge to enhance your projects. As a user, you’ll also gain a deeper appreciation for the thought and effort that goes into creating interfaces and connectors that keep you seamlessly connected to the digital and physical worlds.

So, whether you’re a curious learner or a seasoned professional, join us on this journey through types of interfaces and wire connectors to discover how they shape the experiences we encounter every day. Let’s dive in and uncover the secrets to designing and implementing interfaces and connectors that captivate, engage, and empower users.

Different Types of Interfaces

Here are just some of the many types of interfaces that exist, each with its unique characteristics and applications in various domains:

- Graphical User Interfaces (GUIs)

- Command-Line Interfaces (CLIs)

- Voice Interfaces

- Touch Interfaces

- Augmented Reality (AR) and Virtual Reality (VR) Interfaces

- Conversational Interfaces

- Gesture-Based Interfaces

- Natural Language Processing Interfaces

- Machine Learning and Computer Vision Interfaces

- Wearable Interfaces

- Haptic Interfaces

- Brain-Computer Interfaces (BCIs)

- Biometric Interfaces

- Web Interfaces

- Mobile Interfaces

- Desktop Interfaces

- Game Interfaces

- Automotive Interfaces

- Industrial Interfaces

- Internet of Things (IoT) Interfaces

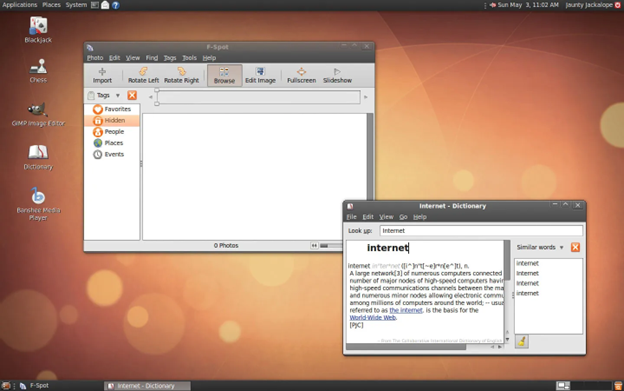

Graphical User Interfaces (GUIs)

Graphical User Interfaces (GUIs) revolutionized the way users interact with computers by providing intuitive visual representations and interactive elements. GUIs have become the predominant interface type for most software applications, operating systems, and websites. They combine visual elements, such as icons, menus, and buttons, with user-friendly interactions, enabling users to navigate, manipulate, and control digital content with ease.

Definition and Characteristics

- GUIs are visual interfaces that use graphical elements, including icons, windows, buttons, and menus, to represent and manipulate digital information.

- They provide a visual metaphor that simulates real-world objects and actions, making interactions more intuitive for users.

- GUIs offer a combination of direct manipulation and indirect manipulation techniques, allowing users to interact with elements directly on the screen or through input devices like a mouse or touchpad.

Key Components of GUIs

- Icons: Visual symbols that represent specific functions, applications, or files. Clicking or tapping on an icon typically triggers an associated action.

- Windows: Serve as containers for displaying and organizing content, allowing users to view and interact with multiple application interfaces simultaneously.

- Menus: Provide a hierarchical list of commands or options, accessible through dropdown menus or menu bars, enabling users to perform specific actions or access various features.

- Buttons: Represent interactive elements that users can click or tap to initiate specific actions or trigger specific functionalities.

- Text Input Fields: Allow users to enter text or data, such as in search bars, forms, or text editors.

- Dialog Boxes: Pop-up windows that prompt users for input, display messages, or provide options for decision-making.

Advantages of GUIs

- Ease of Use: GUIs make it easier for users to learn and navigate applications, reducing the learning curve.

- Visual Representation: GUIs use visual cues and icons to represent complex operations and functionalities, aiding user understanding.

- WYSIWYG (What You See Is What You Get): GUIs provide immediate visual feedback, allowing users to see the impact of their actions in real-time.

- Multitasking: GUIs enable users to work with multiple windows or applications concurrently, improving productivity.

- Accessibility: GUIs offer features like resizable text, color contrast adjustments, and assistive technologies to enhance accessibility for users with disabilities.

Design Considerations

- Consistency: Maintaining consistent visual elements, layout, and interaction patterns across an application or system to enhance user familiarity.

- User-Centered Design: Conducting user research and usability testing to ensure the GUI meets the needs and expectations of the target audience.

- Visual Hierarchy: Prioritizing important elements, providing clear visual cues, and using appropriate typography and color schemes to guide user attention.

- Responsiveness: Designing GUIs that adapt to different screen sizes and devices to ensure a seamless experience across platforms.

Graphical User Interfaces have played a significant role in shaping modern digital experiences, making technology more accessible and user-friendly. Their intuitive visual representations and interactive nature have transformed the way we interact with software applications and systems, providing a foundation for countless digital innovations.

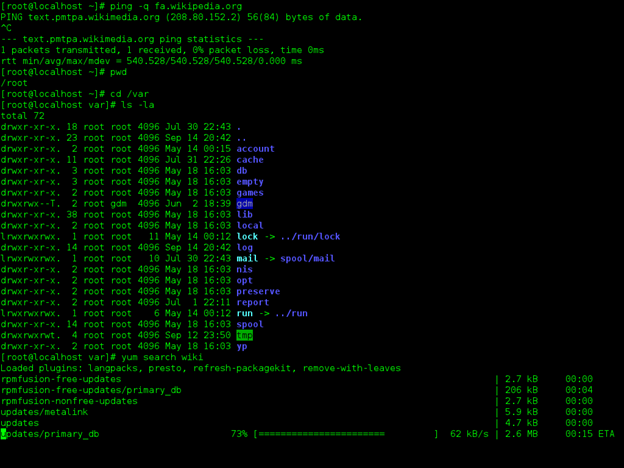

Command-Line Interfaces (CLIs)

Command-Line Interfaces (CLIs) are text-based interfaces that allow users to interact with a computer or software application by typing commands. Unlike Graphical User Interfaces (GUIs) that rely on visual elements and direct manipulation, CLIs operate primarily through textual commands and responses. While they may seem archaic compared to GUIs, CLIs offer power, efficiency, and flexibility, making them popular among developers, system administrators, and advanced users.

Definition and Characteristics

- CLIs are text-based interfaces that operate through a command prompt or terminal.

- Users interact with CLIs by typing specific commands and arguments, which are executed by the system or application.

- Responses to commands are displayed as text output, providing feedback, information, or error messages.

Key Components of CLIs

- Command Prompt/Terminal: The text-based environment where users enter commands.

- Commands: Textual instructions that users enter to perform specific actions or operations.

- Arguments: Additional parameters provided alongside commands to modify their behavior or specify targets.

- Command Syntax: The specific format and structure that commands must follow for proper execution.

- Output: Text-based responses or feedback from the system or application after executing a command.
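
To make these components concrete, here is a minimal sketch using Python’s standard `argparse` module. The tool name `filetool`, the `count` command, and the `--min-length` option are hypothetical names chosen for illustration; they do not refer to any real utility.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # "filetool" and its "count" command are hypothetical, for illustration only.
    parser = argparse.ArgumentParser(prog="filetool", description="Toy text utility")
    commands = parser.add_subparsers(dest="command", required=True)

    # Command: a textual instruction the user types.
    count = commands.add_parser("count", help="count the words in a piece of text")
    # Arguments: extra parameters that specify the target or modify behavior.
    count.add_argument("text", help="the text to count words in")
    count.add_argument("--min-length", type=int, default=1,
                       help="ignore words shorter than this many characters")
    return parser


def run(argv: list[str]) -> str:
    # Command syntax is enforced by the parser; invalid input prints a
    # usage message and exits instead of reaching this code.
    args = build_parser().parse_args(argv)
    if args.command == "count":
        words = [w for w in args.text.split() if len(w) >= args.min_length]
        # Output: the text-based response returned to the user.
        return f"{len(words)} words"
    raise ValueError(f"unknown command: {args.command}")


if __name__ == "__main__":
    import sys
    print(run(sys.argv[1:]))
```

Saved as a script, this could be invoked as `filetool count "a quick brown fox" --min-length 2`. The parser also generates `--help` text from the `help` strings, which covers part of the documentation concern discussed later in this section.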

Advantages of CLIs

- Efficiency and Speed: CLIs allow users to perform complex tasks quickly by entering concise commands instead of navigating through graphical interfaces.

- Flexibility and Control: CLIs provide granular control over system operations and configurations, enabling advanced users to customize and automate workflows.

- Scripting and Automation: CLIs are well-suited for scripting and automation tasks, allowing users to create powerful scripts to perform repetitive tasks or complex operations.

- Remote Access: CLIs are commonly used for remote administration, enabling users to manage systems and applications over networks or through secure shell (SSH) connections.

Design Considerations

- Command Structure: Designing a clear and consistent syntax for commands to ensure ease of use and minimize the learning curve.

- Documentation: Providing comprehensive documentation, including command references, usage examples, and explanations of available options and parameters.

- Error Handling: Implementing informative error messages and proper feedback to guide users in correcting command input mistakes.

- Autocomplete and History: Including features that offer command autocompletion and command history recall to improve user efficiency and reduce errors.

- Help and Documentation Integration: Offering built-in help systems that provide contextual information and assistance for users.

While CLIs may require a learning curve and familiarity with command syntax, they remain an essential tool for power users and technical professionals. Their speed, flexibility, and scripting capabilities make them invaluable for system administration, programming, and automation tasks. Additionally, CLIs often coexist with GUIs, providing a complementary interface option for those seeking greater control and efficiency in their interactions with computers and software applications.

Voice Interfaces

Voice Interfaces utilize natural language processing and voice recognition technologies to enable users to interact with digital systems and devices using spoken commands or queries. With the rapid advancements in speech recognition and voice assistants, voice interfaces have gained significant popularity, offering hands-free and intuitive interactions. From virtual assistants in smartphones to smart speakers and voice-controlled appliances, voice interfaces are transforming the way we interact with technology.

Definition and Characteristics

- Voice Interfaces allow users to interact with digital systems, devices, or applications using spoken commands or queries.

- They employ speech recognition technology to convert spoken words into text and natural language processing algorithms to understand and interpret user intents.

- Responses to voice commands can be provided audibly through synthesized speech or displayed as text on a screen.

Key Components of Voice Interfaces

- Speech Recognition: The technology that converts spoken words or phrases into text, enabling the system to understand user input.

- Natural Language Processing (NLP): Algorithms that analyze and interpret the meaning and context of user commands or queries.

- Voice Assistant: A virtual assistant or AI-powered entity that interacts with users, performs tasks, provides information, and executes commands.

- Dialog Management: The ability of the voice interface to engage in multi-turn conversations and maintain context throughout the interaction.

- Text-to-Speech (TTS): The technology that converts text into synthesized speech for delivering audible responses.
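
The way these components hand work to one another can be sketched as a simple pipeline. The example below is only an illustration of the data flow: every stage is a stub (the "audio" is just UTF-8 text), all names are hypothetical, and dialog management is left out for brevity. A real system would plug actual speech recognition and speech synthesis engines into the same slots.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    recognize: Callable[[bytes], str]   # speech recognition: audio -> text
    understand: Callable[[str], str]    # NLP: text -> intent label
    respond: Callable[[str], str]       # assistant logic: intent -> reply text
    synthesize: Callable[[str], bytes]  # text-to-speech: reply text -> audio

    def handle(self, audio: bytes) -> bytes:
        text = self.recognize(audio)
        intent = self.understand(text)
        reply = self.respond(intent)
        return self.synthesize(reply)


# Stub stages (assumptions for illustration): audio is treated as UTF-8 text.
def fake_recognize(audio: bytes) -> str:
    return audio.decode("utf-8").lower()


def fake_understand(text: str) -> str:
    return "get_time" if "time" in text else "unknown"


def fake_respond(intent: str) -> str:
    replies = {"get_time": "It is noon."}
    return replies.get(intent, "Sorry, I did not understand that.")


def fake_synthesize(reply: str) -> bytes:
    return reply.encode("utf-8")


pipeline = VoicePipeline(fake_recognize, fake_understand, fake_respond, fake_synthesize)
```

Keeping each stage behind a plain function signature means any one of them can be swapped out or tested on its own.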

Applications and Use Cases

- Virtual Assistants: Voice interfaces power virtual assistants like Siri, Google Assistant, Alexa, and Cortana, allowing users to perform tasks, ask questions, and receive personalized recommendations.

- Smart Speakers: Voice-controlled devices such as Amazon Echo, Google Home, or Apple HomePod enable users to control smart home devices, play music, access information, and more.

- Automotive Systems: Voice interfaces are integrated into car infotainment systems, allowing drivers to make hands-free calls, control audio playback, navigate, and access vehicle settings.

- Customer Support: Voice interfaces are employed in customer support systems, enabling users to interact with automated voice systems for queries, account management, and issue resolution.

Advantages of Voice Interfaces

- Hands-free Interaction: Voice interfaces provide a hands-free experience, allowing users to interact with technology while engaged in other activities or when their hands are occupied.

- Natural and Intuitive: Voice interfaces leverage humans’ natural language abilities, making interactions more natural and reducing the learning curve.

- Accessibility: Voice interfaces offer improved accessibility for individuals with mobility impairments or visual impairments.

- Convenience: Voice interfaces streamline interactions by eliminating the need for physical input devices or complex menu navigation.

Design Considerations

- Voice Recognition Accuracy: Striving for high accuracy in speech recognition to ensure that the system accurately understands user commands and queries.

- Contextual Understanding: Designing voice interfaces that can comprehend and maintain context in multi-turn conversations, providing more meaningful responses.

- Voice Feedback: Crafting synthesized speech responses that are clear, natural-sounding, and appropriate for the context.

- Error Handling: Implementing effective error handling strategies to guide users when the system cannot understand or fulfill a request.

Voice interfaces continue to evolve and improve, becoming more sophisticated and integrated into various devices and applications. As voice recognition technology advances, voice interfaces are set to play an increasingly prominent role in our daily lives, offering seamless and intuitive interactions with the digital world through the power of our voices.

Touch Interfaces

Touch Interfaces have revolutionized the way we interact with smartphones, tablets, kiosks, and other touch-enabled devices. With the rise of capacitive touchscreens, touch interfaces have become ubiquitous, providing users with intuitive and direct manipulation of digital content through touch gestures. From tapping and swiping to pinch-to-zoom, touch interfaces have transformed the way we navigate and engage with technology.

Definition and Characteristics

- Touch Interfaces enable users to interact with digital devices or systems by directly touching the screen or touch-sensitive surface.

- Most modern touch interfaces utilize capacitive touch technology, which detects the electrical properties of human touch to register and respond to user input.

- Touch interfaces support various touch gestures, including tapping, swiping, pinching, rotating, and dragging, to perform actions and manipulate content.

Key Components of Touch Interfaces

- Touchscreen: The display surface that detects touch input and relays it to the device or system.

- Multi-Touch Gestures: Touch interfaces support multiple simultaneous touch points, allowing users to perform gestures like pinch-to-zoom, swipe gestures, and multi-finger interactions.

- Virtual Keyboards: On-screen keyboards that enable text input through touch, eliminating the need for physical keyboards.

- Haptic Feedback: Vibrations or tactile feedback provided by the device to simulate the sensation of pressing physical buttons or interacting with objects.
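
As an illustration of how a multi-touch gesture becomes a usable value, the sketch below computes a pinch-to-zoom scale factor from two touch points. It assumes touch positions arrive as `(x, y)` pixel coordinates; the function names are hypothetical and not taken from any particular touch framework.

```python
import math

Point = tuple[float, float]


def distance(a: Point, b: Point) -> float:
    # Straight-line distance between two touch points.
    return math.hypot(b[0] - a[0], b[1] - a[1])


def pinch_scale(start: tuple[Point, Point], current: tuple[Point, Point]) -> float:
    """Return the zoom factor implied by two fingers moving apart or together.

    A value above 1.0 means the fingers spread (zoom in); below 1.0 means
    they pinched together (zoom out).
    """
    start_dist = distance(*start)
    if start_dist == 0:
        # Both fingers started on the same point; treat as no zoom.
        return 1.0
    return distance(*current) / start_dist
```

For example, fingers that start 100 pixels apart and end 200 pixels apart give a scale of 2.0, so the content would be drawn at twice its size.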

Applications and Use Cases

- Mobile Devices: Touch interfaces are prevalent in smartphones and tablets, enabling users to navigate apps, browse the web, play games, and perform various tasks.

- Kiosks and Self-Service Machines: Touch interfaces are commonly used in public places like airports, restaurants, and retail stores for self-service operations, such as ticketing, ordering, and information retrieval.

- Interactive Displays: Touch interfaces power interactive displays in museums, exhibitions, and educational settings, allowing users to explore and interact with digital content.

- Point-of-Sale Systems: Touch interfaces are integrated into cash registers and point-of-sale systems, facilitating efficient and intuitive transactions.

Advantages of Touch Interfaces

- Intuitive and Direct Manipulation: Touch interfaces provide a natural and intuitive way to interact with digital content, mimicking real-world interactions.

- Immediate Visual Feedback: Touch interfaces offer immediate visual feedback, with content responding in real-time to touch gestures, enhancing user engagement.

- Gesture-Based Interactions: Touch interfaces support a wide range of gestures, enabling users to perform complex actions quickly and effortlessly.

- Accessibility: Touch interfaces can be easier for some users than a mouse or keyboard because they allow direct interaction with on-screen elements, although they still require a degree of motor control.

Design Considerations

- Touch Target Size: Designing touch interfaces with sufficiently large and easily tappable targets to accommodate different finger sizes and reduce errors.

- Gestural Clarity: Ensuring that touch gestures and their associated actions are clear and consistent across the interface to avoid confusion.

- Responsiveness: Optimizing touch interfaces for responsiveness and minimal latency to provide a smooth and seamless user experience.

- Visual Hierarchy: Designing interfaces with clear visual cues, highlighting touchable elements, and providing feedback when they are interacted with.

- Accessibility Features: Incorporating accessibility features like adjustable touch sensitivity, voice feedback, or assistive touch options for users with specific needs.
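
The touch target size consideration can be sketched in code. One common approach, shown here as an assumption rather than a required technique, is to keep a small icon visually small but expand its touchable area to a minimum size. The 44-unit default below is an illustrative value; the right minimum depends on the platform’s own guidelines.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float


def hit_area(visual: Rect, min_size: float = 44.0) -> Rect:
    """Grow the touchable area to at least min_size, centered on the element."""
    width = max(visual.width, min_size)
    height = max(visual.height, min_size)
    center_x = visual.x + visual.width / 2
    center_y = visual.y + visual.height / 2
    return Rect(center_x - width / 2, center_y - height / 2, width, height)


def is_hit(visual: Rect, touch_x: float, touch_y: float, min_size: float = 44.0) -> bool:
    """Return True if the touch lands inside the expanded hit area."""
    area = hit_area(visual, min_size)
    return (area.x <= touch_x <= area.x + area.width
            and area.y <= touch_y <= area.y + area.height)
```

With this sketch, a 20-by-20 icon at position (100, 100) responds to touches anywhere in the 44-by-44 square centered on it, which makes near misses less likely to be ignored.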

Touch interfaces have transformed the way we interact with digital devices, offering intuitive and engaging user experiences. As touch technology continues to evolve, incorporating features like pressure sensitivity and haptic feedback, touch interfaces are poised to play an even more significant role in our daily lives, shaping the way we navigate and interact with the digital world.

Augmented Reality (AR) and Virtual Reality (VR) Interfaces

Augmented Reality (AR) and Virtual Reality (VR) Interfaces immerse users in digitally enhanced or virtual environments, enabling interactive and immersive experiences. AR overlays digital content onto the real world, while VR creates a fully simulated virtual environment. Both AR and VR interfaces offer unique opportunities for entertainment, education, training, and various other domains, transforming the way we perceive and interact with the digital realm.

Definition and Characteristics

- Augmented Reality (AR): AR interfaces blend digital content, such as images, videos, or 3D models, with the real world, enhancing our perception and interaction with the physical environment.

- Virtual Reality (VR): VR interfaces create a fully immersive, computer-generated virtual environment that users can explore and interact with using specialized devices like headsets and controllers.

- Both AR and VR interfaces aim to provide a sense of presence and enable users to interact with digital objects and environments in natural and intuitive ways.

Key Components of AR and VR Interfaces

- Head-Mounted Displays (HMDs): Specialized devices worn on the head that deliver visual and auditory stimuli, creating an immersive AR or VR experience.

- Motion Tracking: Sensors and cameras that track the user’s movements and gestures, allowing them to interact with and manipulate digital objects within the AR or VR environment.

- Controllers: Hand-held devices, such as wands or motion-tracked controllers, that enable users to interact with and manipulate virtual objects in the AR or VR space.

- Spatial Audio: Audio technology that provides three-dimensional sound, enhancing the sense of presence and immersion within the AR or VR environment.

Applications and Use Cases

- Entertainment and Gaming: AR and VR interfaces offer immersive gaming experiences, interactive storytelling, and engaging entertainment content.

- Training and Simulation: AR and VR interfaces are used for training simulations in fields such as healthcare, aviation, engineering, and military, providing realistic and safe environments for learning and practice.

- Design and Visualization: AR and VR interfaces enable architects, engineers, and designers to visualize and interact with 3D models, facilitating better spatial understanding and design iterations.

- Education and Learning: AR and VR interfaces enhance educational experiences by providing interactive and immersive content, enabling students to explore subjects in depth.

Advantages of AR and VR Interfaces

- Immersive Experiences: AR and VR interfaces provide users with deeply immersive experiences, transporting them to new digital realms or enhancing their perception of the real world.

- Interactive and Engaging: AR and VR interfaces offer interactive and engaging experiences, enabling users to manipulate and interact with digital objects and environments.

- Spatial Understanding: AR and VR interfaces facilitate better spatial understanding, allowing users to perceive and interact with digital content in three dimensions.

- Simulated Training: AR and VR interfaces provide safe and controlled environments for training simulations, reducing risks and costs associated with real-world training scenarios.

Design Considerations

- User Comfort: Designing AR and VR interfaces with considerations for user comfort, including ergonomic design, minimizing motion sickness, and addressing physical discomfort during prolonged use.

- User Interface and Interaction Design: Creating intuitive and user-friendly interfaces within the AR or VR environment, considering factors such as visual cues, spatial mapping, and gesture-based interactions.

- Realism and Immersion: Striving for realism in AR and VR environments through high-quality visuals, realistic physics, and accurate rendering of objects and environments.

- Performance Optimization: Optimizing AR and VR interfaces for smooth performance and low latency to maintain an immersive experience without distractions or lag.

Augmented Reality (AR) and Virtual Reality (VR) interfaces continue to push the boundaries of human-computer interaction, offering immersive and transformative experiences. As technology advances, AR and VR are expected to become increasingly integrated into various aspects of our lives, unlocking new possibilities for entertainment, education, training, and beyond.

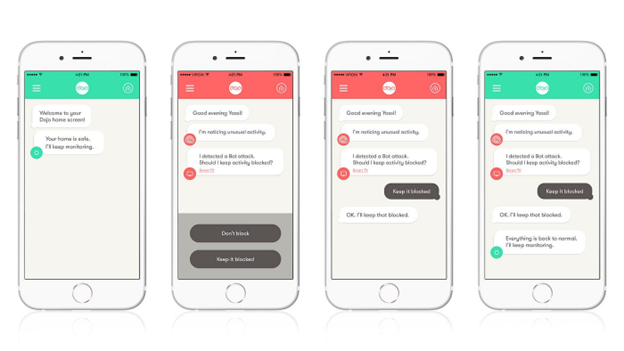

Conversational Interfaces

Conversational Interfaces enable users to interact with digital systems and devices through natural language conversations. These interfaces leverage technologies like natural language processing (NLP) and machine learning to understand user inputs, interpret intents, and provide appropriate responses. From chatbots and virtual assistants to voice-activated devices, conversational interfaces offer intuitive and engaging ways for users to interact with technology.

Definition and Characteristics

- Conversational Interfaces allow users to interact with digital systems using natural language conversations, typically in written or spoken form.

- They employ NLP algorithms to understand and interpret user inputs, extract meaning, and generate relevant responses.

- Conversational interfaces may be text-based, voice-based, or a combination of both.

Key Components of Conversational Interfaces

- User Input: Users provide inputs in the form of text or speech, expressing their intentions, queries, or commands.

- Natural Language Processing (NLP): NLP algorithms analyze and interpret user inputs, extracting meaning, and identifying key intents or entities.

- Dialog Management: Conversational interfaces engage in multi-turn conversations, maintaining context, and guiding the flow of the conversation.

- Response Generation: Based on user inputs, intents, and context, conversational interfaces generate appropriate responses, which can be in text or speech form.

- Language Understanding: Conversational interfaces employ techniques such as intent recognition, entity extraction, sentiment analysis, and context comprehension to understand user inputs.
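
To show how dialog management keeps context across turns, here is a minimal rule-based sketch of a table-booking conversation. It is a toy under stated assumptions: the `BookingDialog` class, its slot names, and the regular expressions are hypothetical, and production systems typically rely on trained language models rather than hand-written patterns.

```python
import re
from dataclasses import dataclass, field


@dataclass
class BookingDialog:
    # Context that persists across turns: the details collected so far.
    slots: dict[str, str] = field(default_factory=dict)

    def _extract(self, text: str) -> None:
        # Entity extraction with simple patterns (illustrative only).
        party = re.search(r"\b(\d+)\s+(?:people|guests)\b", text, re.IGNORECASE)
        if party:
            self.slots["party_size"] = party.group(1)
        time = re.search(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm))\b", text, re.IGNORECASE)
        if time:
            self.slots["time"] = time.group(1)

    def reply(self, text: str) -> str:
        # Response generation: ask for whatever is still missing.
        self._extract(text)
        if "party_size" not in self.slots:
            return "How many people will be dining?"
        if "time" not in self.slots:
            return "What time would you like?"
        return f"Booked a table for {self.slots['party_size']} at {self.slots['time']}."
```

Because the collected slots live on the object, the second user message only needs to supply the missing detail; the interface remembers the party size from the first turn.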

Applications and Use Cases

- Virtual Assistants: Conversational interfaces power virtual assistants like Siri, Google Assistant, Alexa, and Cortana, providing information, performing tasks, and offering personalized recommendations.

- Chatbots: Conversational interfaces are used in chatbot applications for customer support, answering FAQs, providing automated assistance, and facilitating interactions in various industries.

- Voice-Activated Devices: Devices like smart speakers or voice-activated appliances leverage conversational interfaces to execute commands, answer questions, and control connected smart home devices.

- Messaging Apps: Conversational interfaces are integrated into messaging apps, enabling users to interact with businesses, make reservations, order products, and access services.

Advantages of Conversational Interfaces

- Natural and Intuitive: Conversational interfaces leverage humans’ natural language abilities, making interactions more natural and reducing the learning curve.

- Accessibility: Conversational interfaces offer improved accessibility for individuals with limited mobility, vision impairments, or those who prefer verbal communication.

- Efficiency and Convenience: Conversational interfaces enable users to perform tasks, get information, or complete transactions through simple and direct conversations, saving time and effort.

- Personalization: Conversational interfaces can leverage user preferences, history, and context to offer personalized responses and recommendations.

Design Considerations

- Language Understanding and Context: Developing robust language understanding models to accurately interpret user inputs, considering context and user intent.

- Response Generation: Designing response generation models to generate coherent and contextually appropriate responses, focusing on clarity and naturalness.

- Error Handling and Clarification: Implementing effective error handling strategies and mechanisms to handle ambiguous or misunderstood user inputs.

- Personality and Tone: Incorporating appropriate personality and tone in conversational interfaces to create engaging and user-friendly experiences.

- User Privacy and Data Protection: Ensuring user privacy and data protection by implementing secure and compliant conversational interface systems.

Conversational interfaces are rapidly evolving, offering more sophisticated and personalized interactions with digital systems. As NLP and machine learning technologies advance, conversational interfaces will continue to enhance our daily lives, enabling seamless and intuitive conversations with technology and transforming the way we access information, accomplish tasks, and engage with digital services.

Gesture-Based Interfaces

Gesture-Based Interfaces allow users to interact with digital systems and devices through physical gestures, such as hand movements, body motions, or facial expressions. These interfaces leverage technologies like computer vision, depth sensing, and motion tracking to detect and interpret user gestures, enabling intuitive and immersive interactions. Gesture-based interfaces offer a unique way to engage with technology, providing hands-free and natural interactions.

Definition and Characteristics

- Gesture-Based Interfaces enable users to interact with digital systems using physical gestures instead of traditional input devices like keyboards or mice.

- They utilize technologies like computer vision, depth sensing, or wearable sensors to detect and interpret user gestures accurately.

- Gesture-based interfaces can recognize a wide range of gestures, including hand movements, body gestures, facial expressions, or even eye tracking.

Key Components of Gesture-Based Interfaces

- Gesture Recognition: Technology that detects and recognizes specific gestures performed by users, translating them into meaningful input for digital systems.

- Motion Tracking: Sensors or cameras that track the movements of the user’s hands, body, or face, capturing gestures and translating them into digital input.

- Machine Learning: Gesture-based interfaces often incorporate machine learning algorithms to improve gesture recognition accuracy and adapt to individual user patterns.

- Feedback Mechanisms: Gesture-based interfaces provide visual or haptic feedback to confirm the recognition of gestures or to guide users during interactions.
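
The step from motion tracking to gesture recognition can be illustrated with a deliberately simple heuristic. The sketch below classifies a swipe from a sequence of tracked positions; it is a rule-based stand-in, not the machine learning approach mentioned above, and the function name and the 50-unit threshold are assumptions made for the example.

```python
from typing import Optional, Sequence


def classify_swipe(points: Sequence[tuple[float, float]],
                   min_distance: float = 50.0) -> Optional[str]:
    """Classify tracked positions as a swipe direction, or None if too small.

    Assumes screen coordinates, where y grows downward.
    """
    if len(points) < 2:
        return None
    dx = points[-1][0] - points[0][0]
    dy = points[-1][1] - points[0][1]
    # Ignore small movements so jitter is not reported as a gesture.
    if max(abs(dx), abs(dy)) < min_distance:
        return None
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"
```

The minimum-distance threshold is one way to reduce false positives; a real recognizer would usually also consider speed, path shape, and per-user calibration.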

Applications and Use Cases

- Gaming and Entertainment: Gesture-based interfaces enhance gaming experiences, allowing users to control characters or interact with virtual environments through natural movements.

- Virtual Reality (VR) and Augmented Reality (AR): Gesture-based interfaces enhance immersion in VR or AR environments, enabling users to interact with virtual objects using gestures.

- Smart TVs and Home Entertainment Systems: Gesture-based interfaces are used to control televisions, media players, and other home entertainment devices, offering hands-free and intuitive interactions.

- Public Displays and Interactive Installations: Gesture-based interfaces power interactive exhibits, public displays, and interactive installations, enabling users to navigate content through gestures.

Advantages of Gesture-Based Interfaces

- Intuitive and Natural Interactions: Gesture-based interfaces mimic natural human movements, making interactions more intuitive and reducing the learning curve.

- Hands-Free Control: Gesture-based interfaces offer hands-free interactions, allowing users to control digital systems without physical contact or the need for traditional input devices.

- Immersive Experiences: Gesture-based interfaces enhance immersion, enabling users to engage with digital content more directly and physically.

- Accessibility: Gesture-based interfaces provide alternative interaction methods, benefiting individuals with mobility impairments or those who may struggle with traditional input devices.

Design Considerations

- Gesture Recognition Accuracy: Ensuring accurate and reliable gesture recognition to interpret user gestures correctly and minimize false positives or false negatives.

- Gesture Mapping: Designing gesture sets and mapping them to specific actions or functionalities, ensuring consistency and avoiding ambiguity in gesture-based interactions.

- User Calibration and Personalization: Allowing users to calibrate gesture-based interfaces to their own physical abilities and preferences.

- User Feedback: Providing clear visual or haptic feedback to confirm gesture recognition, ensuring users understand when their gestures have been recognized.

- Environmental Considerations: Considering environmental factors such as lighting conditions, space limitations, or potential occlusions that may affect gesture detection and recognition.

Gesture-based interfaces offer exciting possibilities for intuitive and immersive interactions with technology. As gesture recognition technologies advance and become more accurate, gesture-based interfaces will continue to transform how we engage with digital systems, offering a more natural and physically engaging way to interact with the digital world.

Natural Language Processing Interfaces

Natural Language Processing (NLP) Interfaces enable users to interact with digital systems and applications using natural language. These interfaces leverage NLP technologies to understand, interpret, and respond to user inputs in a way that mimics human conversation. NLP interfaces facilitate seamless and intuitive interactions, allowing users to communicate with technology in a more human-like manner.

Definition and Characteristics

- NLP Interfaces enable users to interact with digital systems using natural language, such as spoken or written conversations.

- They leverage NLP algorithms to understand and interpret user inputs, extract meaning, and generate appropriate responses.

- NLP interfaces aim to provide conversational interactions that mimic human conversation patterns and language understanding.

Key Components of NLP Interfaces

- Language Understanding: NLP algorithms analyze user inputs, identifying intents and entities and extracting meaning from text or speech.

- Intent Recognition: NLP interfaces determine the purpose or intention behind user inputs, enabling the system to understand what the user wants to achieve.

- Entity Extraction: NLP interfaces identify specific entities or pieces of information mentioned in user inputs, such as names, dates, or locations.

- Dialog Management: NLP interfaces engage in multi-turn conversations, maintaining context and guiding the flow of the conversation.

- Natural Language Generation: Based on user inputs, intents, and context, NLP interfaces generate appropriate responses in a natural and coherent manner.
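The components above can be illustrated with a deliberately simple, rule-based Python sketch. Real NLP interfaces typically rely on trained language models. Here, keyword matching and a regular expression stand in for them so the flow from intent recognition to entity extraction to response generation is easy to follow. The intent names, keywords, and response templates are hypothetical.

```python
import re
from typing import Dict, List

# Hypothetical intents, each described by a few trigger keywords.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "set_alarm": ["alarm", "wake"],
    "get_weather": ["weather", "forecast", "rain"],
}

# Matches simple clock times such as "7 am" or "7:30 pm".
TIME_PATTERN = re.compile(r"\b(\d{1,2})(?::(\d{2}))?\s*(am|pm)\b", re.IGNORECASE)


def recognize_intent(text: str) -> str:
    """Pick the intent whose keywords overlap most with the input."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    scores = {name: len(words & set(kws)) for name, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def extract_entities(text: str) -> Dict[str, str]:
    """Pull out the pieces of information the intent needs (only a time here)."""
    entities: Dict[str, str] = {}
    match = TIME_PATTERN.search(text)
    if match:
        entities["time"] = match.group(0)
    return entities


def generate_response(intent: str, entities: Dict[str, str]) -> str:
    """Template-based stand-in for natural language generation."""
    if intent == "set_alarm" and "time" in entities:
        return f"Okay, alarm set for {entities['time']}."
    if intent == "set_alarm":
        return "What time should I set the alarm for?"
    if intent == "get_weather":
        return "Here is the weather forecast."
    return "Sorry, I did not understand that."


text = "Wake me up at 7:30 am"
print(generate_response(recognize_intent(text), extract_entities(text)))
```

Keeping the three stages as separate functions mirrors the component list: each could later be swapped for a learned model without changing the others.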

Applications and Use Cases

- Virtual Assistants: NLP interfaces power virtual assistants like Siri, Google Assistant, Alexa, and Cortana, providing information, performing tasks, and offering personalized recommendations.

- Chatbots and Customer Support: NLP interfaces are used in chatbot applications for customer support, answering FAQs, providing automated assistance, and facilitating interactions in various industries.

- Voice-Activated Devices: Devices like smart speakers or voice-activated appliances leverage NLP interfaces to execute commands, answer questions, and control connected smart home devices.

- Language Translation: NLP interfaces are employed in language translation tools and applications, enabling users to communicate across different languages.

Advantages of NLP Interfaces

- Natural and Conversational Interactions: NLP interfaces let users converse in everyday language, making interactions more intuitive and reducing the learning curve.

- Contextual Understanding: NLP interfaces comprehend and maintain context in multi-turn conversations, allowing for more meaningful and coherent interactions.

- Personalization: NLP interfaces can leverage user preferences, history, and context to offer personalized responses and recommendations.

- Increased Efficiency: NLP interfaces enable users to perform tasks, get information, or complete transactions through natural language conversations, saving time and effort.

Design Considerations

- Language Understanding and Context: Developing robust language understanding models to accurately interpret user inputs, considering context and user intent.

- Response Generation: Designing response generation models to generate coherent and contextually appropriate responses, focusing on clarity and naturalness.

- Error Handling and Clarification: Implementing effective error handling strategies and mechanisms to handle ambiguous or misunderstood user inputs.

- Personality and Tone: Incorporating appropriate personality and tone in NLP interfaces to create engaging and user-friendly experiences.

- User Privacy and Data Protection: Ensuring user privacy and data protection by implementing secure and compliant NLP interface systems.
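As a rough illustration of context handling and clarification, the Python sketch below keeps a small dialog state across turns, asks the user to rephrase when the (assumed) intent confidence is low, and asks a follow-up question when required information is missing. The `DialogState` class, the `book_table` intent, its required slots, and the 0.6 threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class DialogState:
    """Context carried from one turn to the next."""
    intent: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)


# Hypothetical: information each intent needs before it can be fulfilled.
REQUIRED_SLOTS = {"book_table": ["date", "party_size"]}


def next_turn(state: DialogState, intent: Optional[str], confidence: float,
              slots: Dict[str, str], threshold: float = 0.6) -> str:
    """Update the state with one user turn and return the system reply."""
    if intent is not None and confidence < threshold:
        # Ambiguous input: ask for clarification instead of guessing.
        return "Sorry, I am not sure I understood. Could you rephrase that?"
    if intent is not None:
        state.intent = intent
    state.slots.update(slots)
    if state.intent is None:
        return "How can I help you?"
    missing = [s for s in REQUIRED_SLOTS.get(state.intent, []) if s not in state.slots]
    if missing:
        return f"Could you tell me the {missing[0].replace('_', ' ')}?"
    details = ", ".join(f"{k}={v}" for k, v in sorted(state.slots.items()))
    return f"All set: {state.intent} ({details})."


state = DialogState()
print(next_turn(state, "book_table", 0.9, {"date": "Friday"}))
print(next_turn(state, None, 0.0, {"party_size": "4"}))
```

In the second turn the user supplies only a party size. Because the intent and date are remembered in `state`, the system can complete the request without asking again.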

NLP interfaces empower users to communicate with digital systems more naturally and conversationally. As NLP technologies advance, these interfaces will continue to improve, offering more accurate language understanding and generating more human-like responses. NLP interfaces have the potential to transform how we interact with technology, making it more accessible, engaging, and user-friendly.

Machine Learning and Computer Vision Interfaces

Machine learning and computer vision are two closely related fields that have seen significant advancements in recent years. Machine learning focuses on developing algorithms and models that can learn from data and make predictions or decisions, while computer vision deals with the extraction, analysis, and understanding of visual information from images or videos. To effectively apply machine learning techniques to computer vision problems, appropriate interfaces and frameworks are essential. In this section, we explore the interfaces and frameworks that facilitate the integration of machine learning and computer vision.

OpenCV

OpenCV (Open Source Computer Vision Library) is a popular open-source library that provides a wide range of computer vision algorithms and functions. Its deep neural network (dnn) module can load and run models trained in frameworks such as TensorFlow and Caffe, as well as models exported to ONNX from frameworks like PyTorch, allowing developers to use pre-trained or custom-trained models for computer vision tasks. OpenCV also includes its own module of classical machine learning algorithms. This integration of machine learning and computer vision enables tasks such as object detection, image segmentation, and facial recognition.

TensorFlow

TensorFlow is a machine learning framework developed by Google that provides a comprehensive ecosystem for building and deploying machine learning models. The TensorFlow Object Detection API, distributed through the TensorFlow Model Garden, offers pre-trained models and tools for training custom object detection models. These models can be used in computer vision applications to detect and classify objects in images or videos. TensorFlow also supports other computer vision tasks such as image segmentation and image generation.

PyTorch

PyTorch is another popular machine learning framework that has gained significant traction in the research community. It provides a dynamic computational graph and a flexible interface for building and training deep learning models. PyTorch offers various computer vision libraries and modules, such as torchvision, which provides pre-trained models and datasets for computer vision tasks. These models can be easily integrated into computer vision applications to perform tasks such as image classification, object detection, and semantic segmentation.

Keras

Keras is a high-level neural networks API written in Python. It provides a user-friendly interface for building and training deep learning models. Keras runs on top of lower-level backends: earlier versions supported TensorFlow and Theano, while Keras 3 supports TensorFlow, JAX, and PyTorch. It offers pre-trained image classification models through Keras Applications, and companion libraries cover tasks such as object detection. Keras allows developers to quickly prototype and deploy machine learning models in computer vision applications.

Other Interfaces

Wearable Interfaces

Wearable interfaces refer to user interfaces that are integrated into wearable devices, such as smartwatches, fitness trackers, or smart clothing. These interfaces allow users to interact with the device and access its functionalities. Wearable interfaces can include touchscreens, buttons, voice commands, or even fabric-based interfaces. They enable users to control and interact with the wearable device conveniently.

Haptic Interfaces

Haptic interfaces involve the use of touch or tactile feedback to provide interaction between the user and a device. These interfaces utilize vibrations, force feedback, or other tactile sensations to convey information or simulate physical interactions. Haptic interfaces can be found in devices such as smartphones, gaming controllers, or virtual reality systems. They enhance the user experience by providing a sense of touch and immersion.

Brain-Computer Interfaces (BCIs)

Brain-Computer Interfaces (BCIs) establish a direct communication pathway between the brain and an external device. These interfaces enable users to control devices or interact with them using their brain activity. BCIs can be used for various applications, including assistive technologies for individuals with disabilities or for controlling devices in virtual reality environments. They rely on technologies such as electroencephalography (EEG) to detect and interpret brain signals.

Biometric Interfaces

Biometric interfaces utilize biometric data, such as fingerprints, facial recognition, or iris scans, to authenticate and identify users. These interfaces are commonly used in security systems, smartphones, or access control systems. Biometric interfaces provide a convenient and secure way to verify the identity of individuals.
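One common way to think about biometric verification is as a comparison between a stored template and a fresh sample, accepted only when the two are similar enough. The Python sketch below assumes that some feature extractor (not shown) has already turned each fingerprint or face image into a numeric feature vector. The vectors and the 0.9 threshold are made up for illustration, and real systems tune such thresholds carefully.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Similarity of two feature vectors; 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("Feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def verify(enrolled: Sequence[float], probe: Sequence[float], threshold: float = 0.9) -> bool:
    """Accept the probe sample if it is close enough to the enrolled template."""
    return cosine_similarity(enrolled, probe) >= threshold


enrolled_template = [0.2, 0.9, 0.4]   # hypothetical stored template
same_person = [0.21, 0.88, 0.41]      # hypothetical new sample, similar
other_person = [0.9, 0.1, 0.3]        # hypothetical new sample, different

print(verify(enrolled_template, same_person))
print(verify(enrolled_template, other_person))
```

Raising the threshold makes false accepts less likely but rejects more genuine users, which is the basic trade-off between security and convenience in this kind of interface.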

Web Interfaces

Web interfaces are user interfaces that allow users to interact with websites or web applications. These interfaces are typically accessed through web browsers and can include elements such as menus, buttons, forms, and multimedia content. Web interfaces enable users to navigate, interact, and consume information on the internet.

Mobile Interfaces

Mobile interfaces are user interfaces designed specifically for mobile devices, such as smartphones or tablets. These interfaces are optimized for smaller screens and touch-based interactions. Mobile interfaces often include gestures, swipes, taps, and other touch-based interactions to navigate and interact with mobile applications.

Desktop Interfaces

Desktop interfaces are user interfaces designed for desktop computers or laptops. These interfaces typically involve the use of a keyboard, mouse, or trackpad for input, along with a monitor or display for output. Desktop interfaces provide a wide range of interaction options, including keyboard shortcuts, menus, windows, and multi-tasking capabilities.

Game Interfaces

Game interfaces are user interfaces specifically designed for video games. These interfaces can include on-screen menus, heads-up displays (HUDs), control schemes, and other elements that allow players to interact with the game. Game interfaces are designed to provide an immersive and intuitive gaming experience.

Automotive Interfaces

Automotive interfaces are user interfaces found in vehicles, such as cars or trucks. These interfaces include controls for the vehicle’s entertainment system, climate control, navigation, and other features. Automotive interfaces can be physical buttons, touchscreens, voice commands, or a combination of these input methods.

Industrial Interfaces

Industrial interfaces are user interfaces used in industrial settings, such as factories or manufacturing plants. These interfaces are designed to control and monitor industrial processes and equipment. Industrial interfaces can include physical buttons, switches, touchscreens, or specialized control panels.

Internet of Things (IoT) Interfaces

Internet of Things (IoT) interfaces enable users to interact with IoT devices and systems. These interfaces can be accessed through smartphones, web browsers, or dedicated applications. IoT interfaces allow users to monitor and control connected devices, access data, and configure settings remotely.
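As a minimal sketch of remote monitoring and control, the Python example below models a device as a dictionary of settings and applies a JSON command to it. The device fields, the `{"set": {...}}` message shape, and the `apply_command` function are assumptions for illustration. Real IoT platforms define their own message formats and transports.

```python
import json
from typing import Any, Dict

# Fields a remote user may read but not change (hypothetical).
READ_ONLY_FIELDS = {"id"}


def apply_command(state: Dict[str, Any], message: str) -> Dict[str, Any]:
    """Apply a JSON command like {"set": {"target_temp_c": 19.5}}.

    Returns a new state dictionary and leaves the original unchanged.
    """
    command = json.loads(message)
    updates = command.get("set", {})
    for key in updates:
        if key not in state:
            raise ValueError(f"Unknown setting: {key}")
        if key in READ_ONLY_FIELDS:
            raise ValueError(f"Setting is read-only: {key}")
    return {**state, **updates}


# In-memory stand-in for a connected thermostat (hypothetical fields).
device = {"id": "thermostat-1", "power": "on", "target_temp_c": 21.0}

updated = apply_command(device, '{"set": {"target_temp_c": 19.5}}')
print(json.dumps(updated))  # the state a monitoring view would display
```

Validating commands before applying them is a small design choice that keeps a mistyped or unexpected setting from silently changing the device.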

Conclusion

In this blog post, we have delved into the world of interfaces and explored various types that are shaping the way we interact with technology. By understanding and exploring these different types of interfaces, we can anticipate the future of technology and how we interact with it. Whether it’s the immersive metaverse, seamless UX, inconspicuous wearables, or fabric-based interfaces, interfaces continue to evolve and shape our digital experiences.

FAQs about Types of Interface

- What are the different types of user interfaces?

The different types of user interfaces include graphical user interfaces (GUIs), touchscreen interfaces, tangible user interfaces, kinetic user interfaces, natural user interfaces, and organic user interfaces.

- What are the three types of user interfaces based on sensory input?

The three types of user interfaces based on sensory input are visual interfaces, auditory interfaces, and nonvisual interfaces.

- What is the metaverse interface?

The metaverse can be described in terms of four aspects: environment, interface, interaction, and social value. The interface is the aspect through which users reach the virtual environment, so it plays a crucial role in defining the metaverse experience.

- What is the purpose of wearable interfaces?

Wearable interfaces allow users to interact with wearable devices, such as smartwatches or fitness trackers. They can include touchscreens, buttons, voice commands, or fabric-based interfaces.

- What are some examples of user interfaces in augmented reality (AR)?

User interfaces in AR can vary depending on the specific AR scenario or application. Examples of AR interfaces include visual overlays, gesture-based interactions, and information displays.